Inhaltsverzeichnis

Modelling foveated vision in Matlab image processing

VisPep 2016 conference talk, Riga, LV

Abstract

The vision system uses spatially and temporally discrete data to support a view of the environment which is faithful enough to enable physical interaction. Thus, for most situations, correct metric information about the physical world is necessary. The resolution of the eye is spatially highly inhomogeneous, as the maximum resolution is achieved only in a small part of the retina called fovea. Outside of the fovea, the resolution decays almost logarithmically. A sophisticated system to change the gaze quickly between the points of interest is needed consisting of precise saccades to new targets and the ability to combine information gathered from several saccade targets in some form. In the visual cortex, the data provided from the retina are synthesized again for further processing but also in a manner where most of the area is allocated for the part of the virtual image plane near the fovea; moreover, the image is split between the brain halves. Very likely, faithful modeling of the essential inhomogeneities is the key to understanding of some optical illusions which look like aliasing artefacts caused by bad matching of sampling density and spatial frequency content of the processed signal. The main example of this type of illusion are variations of the Hermann grid, and specifically, the scintillating grid. After construction of nets of usable sampling points for the retina and the visual cortex, it is seen that even simple concepts such as a „straight line“ have no priori meaning on such data. Some active work is needed to calibrate data on an inhomogeneous sampling regime to be mapped to the Euclidean geometry we are used to. From a mathematical viewpoint, this is best done by transforming the data to frequency domain, doing the shifts needed to combine data over saccades by the usual phase multiplication there, and back again to the space representation. In Matlab language, the necessary data structures and operators are constructed to resample and combine data from several fixations with intervening saccades. The only reliable source for the metrics of these operators are the sample changes induced by eye movements. It is concluded that the calibration task may be a possible cause for the abundance of otherwise useless microsaccades.

Foveate vision

The retinae of the eyes contain various types of light sensitive cells. For this talk we only consider the „cone“s and do not differentiate between the cone-types which deliver the colour preception. We just note that the distribution of these cells on the retina area is highly inhomogogeneous: most densely in a small region „foveola“ (angle of half degree diameter), where only L- and M-cones are arranged in hexagonal packing, slowly decreasing for the rest of fovea (5 degree diameter), and further decreasing to the full visual field of about 70 degrees or more. There is even a blind spot at the place where the optical nerve crosses from the inside outwards.

In order to get the perception that the world is seen as it is, eye movements are used to scan the visual field for more detailed inspection. About 3 times per second new fixations are made, and the very fast eye movements between them are called „saccades“. This design leads to serious consequences for the mathematics to deal with. It is obvious that in some part of the brain a place must be provided where the information from the pre-saccade state with the data from the new fixation takes place. This „state space“ should be able to contain a lot more of information than the retinae can deliver, in linear algebra language, the perception state space has a much larger dimension than the retina space.

This difference in dimension leads to some „optical illusions“, because for undersampled data several interpretations are possible, and this may cause an effect called „aliasing“. Most people have seen coach wheels turning backwards in old movies caused by the interference of sample rate in time and frequency of the spoke rotation. The scintillating variant of the Hermann grid is looking like an aliasing phenomenon, but it is not immediately clear, what features are aliased there.

Matlab programs and scripts

To investigate this in concrete algorithms and to communicate the findings, I developed some functions and scripts in Matlab language, usable either in Matlab or GNU Octave. They are publically available on http://pkeus.de/Vision together with the manuscript of this talk on a Wiki site http://pkeus.de/dokuwiki?id=en:start . „Wiki“ means that on this site any interested person may contribute, either by editing (registration required) or commenting (email address suffices).

Fourier Theory of Vision

In a review from 2001, Gerald Westheimer (the Fourier Theory of Vision, Perception 30, p. 532-541) wrote:

In his influential book, David Marr (1982) argued that real understanding of vision arises from the computational theory and algorithms rather than the implementation hardware.

and:

The initial phase of enthusiasm for the Fourier analysis in spatial vision in time gave way to a more reflexive tone.

and:

The attempts from Helmholtz to Luneburg, to anchor space perception to constructs derived from mathematical theory have proved elusive.

Since then, of course, some things have been evolved. J. Turski from Houston University has developed the idea of a conformal (angle preserving) camera for foveate vision. The attraction of this idea comes from the fact, that the automorphism group of the extended complex plane (Riemann sphere) is closely related to the Euclidean group in 3 dimensions.

Sampling grids, Cartesian and hexagonal, foveate graph

Only 2 regular grids possible for covering the whole plane,

- Cartesian – easy for computing

- hexagonal – better symmetry properties

Not fully regular, and with a singularity in the origin, the locations can be taken as log-polar. In this case, the number of points on each circle with center in the origin is constant.

For the foveate eye, these patterns are to be glued together, inside hexagonal, outside log-polar hexagonal. The least perturbation for the adjacencies is achieved, when a hexagon with equal side lengths is used for the origin, and this is continued as in the hexagonal log-polar pattern with 6 times of the side length angular positions to the outside. At the border, each location is moved to the mean of the adjacent locations. Every point has 6 neighbours, except the edges of the inner hexagon, where there are 5, and the outer circular border, where are 4.

A grid with hexagon side length 6 and 6 circles around:

Examples below are constructed with side length 20 and 60 circles around, for a viewing field of 10 degrees.

Alternatively, the edge points of the inside hexagon can be stripped before the construction.

The construction is essentially unique for any number of angular positions, which is divisible through 6, up to scale and rotation.

adding colour

L-cones are about 2 times more frequent than M cones. The most even distribution in the hexnet is achieved, if the net is constructed from M cones only, and a refinement with L-cones is constructed by placing one of them in the center of each M cone triangle. In this way the highest spatial frequencies carry the rd/green colour signal. The S cones are absent in the very center, so the innermost of them may be fitted near the flat sides of the inner hexagon, or if the corner points are removed, at their place.

Essential Questions

On such a grid:

- what is a line?

- what is a straight line?

- what is a circle?

- What is a right angle?

- What is a parallel?

- How are lengths compared?

- How is motion encoded?

First we replace the word „line“ by „edge“, and „circle“ by „circular disc“ to avoid discussing the line width of the drawing. Edges are now chains of strictly positive (or negative) Laplace value positions connected by neighbourhood.

The other questions can be answered best, if a Fourier transform is known. A line is straight, or a disc is circular, when the appropriate Fourier coefficients are maximal, not spreaded out. Two line segments have the same lengths, when the base frequency of their endpoints is the same.

Otherwise, a saccade in direction of a straight line should not change the line, a rotation around the center should not change the appearance of a circle, but this would be an intentional process, and could not influence optical illusions.

Perception space

The space of visual perception is not the same as the foveate eye space. It has more locations to be able to integrate the details from several fixations. As far as we know, the brain has allocated space in V1 on a logarithmic scale also. There is a difficulty in mapping the entire foveate net like ours to a flat surface without inappropriate stretching, but as the brain is split anyway, the distortions for the halves are manageable.

On the surface of the perception space, not only the luminance values, but some further dimensions like colour, velocity, and distance (derived from various sources like disparity, motion, perspective) are to be encoded.

In this talk I restrict my attention to the luminance, and, for simplicity, we take the Cartesian plane as a surrogate for the true luminance perception space, as I want to concentrate just on the effects of the higher dimension of the perception space versus the eye space.

I use complex numbers to denote the coordinates in these spaces. You may take the real and imaginary parts of these as just conventional 2dimensional coordinates. One of the reasons to use complex numbers is the complex logarithm.

Remember $ {\rm Re }\ z = |z| \cdot {\rm cos\ \phi},\ {\rm Im }\ z = |z| \cdot {\rm sin\ \phi}$.

If $z = |z| \cdot {\rm e}^{ \rm i \cdot \phi}$ then ${\rm log}(z) = {\rm log}( |z|) + {\rm i} \cdot \phi $ where ${\rm i}$ is the imaginary unit.

This transformation allows to rotate and scale via phase factor multiplication on Fourier transforms just as for translations in the original space.

Whether the luminance value itself or its Laplacian or any other reversible transform is really maintained in the brain, may be left open.

Sparse matrices, adjacency matrix

In contrast to the Cartesian grid, the 2dimensional structure of the eye space is not directly representable in matrix algebra. So all samples are put into a row vector. A similar row vector contains the coordinates of the locations. The graph structure is defined by a matrix containing only 1s at positions, where the row and columns indices belong to adjacent points. The adjacency matrix is an example of a sparse matrix, for which only nonzeroes are really stored. Other sparse matrices are diagonal and permutation matrices, and the interpolation matrices needed to deal with data on foveate graphs.

Given the dimensions of the matrices, it is crucial to use sparse matrices for storage even on 64bit computers.

From the adjacency matrix other graph operations can be derived:

- the incidence matrix containing the relations between nodes and edges of the graph,

- the triangulation matrix containing the triangles used for determining the 3 nearest neighbours in the grid for any position,

- the barycentric coordinates for these points,

- an adjacency matrix for refinement of the triangulation by adding some or all triangle midpoints.

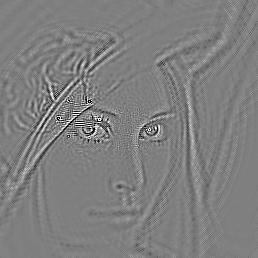

Resampling and Interpolation

For any 2 sampling grids, which are to represent a view of the same data, we need a pair of operators

which transform the view on one grid to the other, and back again, with minimal loss of information. Matlab provides a function interp2 for the transformation from any Cartesian grid to some other grid. For the inverse, a triangulation of

the source locations is used by function griddata. All methods available use only very few of the

nearest points in the other grid for interpolation, mostly 3 or 4. Therefore these transformations can be

expressed as sparse matrix operations. There is nothing wrong using Matlab’s builtins, but they have limitations, when it comes to high frequency content near the Nyquist limit. Griddata generates high frequency artifacts at the triangle borders.

What are the properties to be expected from those matrices defining the interpolation?

- row sums are 1

- all nonzero entries are positive

- second application after back transform should yield the same

In matrix language: $F\cdot B\cdot F = F$ and $B\cdot F\cdot B = B$ for forward and backward transforms. $B$ is called a pseudo-inverse of $F$.

But these requirements are both underdetermined and conflicting. Underdetermined, because any 1-to-1 mapping between subsets of both sides would do. Conflicting, because these subset permutations are the only solutions, and they are not what is needed. We are looking for solutions which render the information content as close as possible.

We take a look at a simple situation, for which the solution is known.

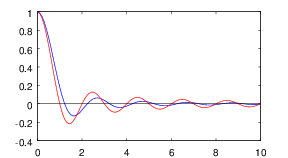

Cardinal sinus function

For data sampled at locations $x_n = n \in \mathbb{Z}$, an indefinitely differentiable function with frequency bandwidth limited to $[-\pi/2,\pi/2]$ exists on $\mathbb R$ which has value $y_n$ at $x_n$, and it is uniquely determined by

$f(t) = \sum y_n \cdot sinc(\pi \cdot(t-x_n)) $ , where $sinc(x) = sin(x) / x$ , and $sinc(0) = 1$ (l’Hospital).

See Nyquist-Shannon sampling theorem

$Sinc$ is the Fourier transform of the indicator function of the Interval $[-\pi/2,\pi/2]$ around the origin.

- the value of summand is zero whenever $t = x_m$ for $m \ne n$

- $sinc$ has some negative values

- it is slowly decaying, therefore for samples $y_n$ near the frequency limit (changing signs very often) the sum tends to diverge.

The most appropriate 2dim equivalent is a function whose frequency bandwidth is limited to a disc of radius 1 and axially symmetric. The radial part is then defined by replacing the $sin$ by Bessel $J_1$ function:

$jinc(x) = J_1(x) / x $ in mathlab $J_1(x)$ = besselj(1,x).

Structure of an interpolation matrix

For any $n\times m$ matrix $A$, a „singular value decomposition“ (SVD) is possible:

$A = U \cdot S \cdot V^* $,

where $U$ and $V$ are unitary $n\times n$ and $m\times m$ matrices, and $S$ is diagonal with nonnegative entries (singular values).

The word unitary means the extensions of the concept of orthogonality to complex matrices. A matrix is unitary iff the inverse is the conjugate transpose $U^{-1} = U^* = conj(U^T) $.

( In matlab language, ' is used for conjugate transpose, and „ .‘ “ for simple transpose.)

Given the SVD, the pseudoinverse $A^-$ of $A$ is easy to write down:

$A^- = V \cdot S^- \cdot U^*$ , where $S^-$ is the diagonal matrix containing the inverses of the positive entries of $S$.

In the $sinc$ example above we can take both $U$ and $S$ as identity matrices, and the rows of $V$ contain the shifted samples of the $sinc$-function.

For non-uniform resampling, we expect the following: the rows of $U$ and $V$ are samples of the tightest $jinc-like$ functions allowed by Shannon’s condition in the source and target grids. The singular values denote the local area distortion factors resulting from a difference in number of positive entries in the center of $jinc$.

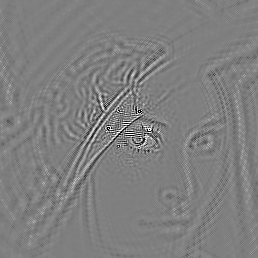

Laplace operator

In any image processing program, the discrete operator approximating the negative Laplace operator

$$\Delta = - (\partial^2/\partial x^2 + \partial^2/\partial y^2)$$

plays a major role. This is explained by the fact that only areas where it is nonzero contain information, i.e. are not entirely defined by data on the area border (Maximum principle). Marr and Hildreth used to approximate the neg-Laplace by a difference of 2 Gaussian functions (DOG) with different scales. On the discrete grids used here, it is much simpler to use a difference of the identity diagonal matrix and a suitable multiple of the adjacency matrix, such the the sum of each row is zero. Each row has to be multiplied by an area factor proportional to the size of square or hexagon to yield a constant value for any quadric function of the coordinates.

On a 2dim Cartesian grid, the matrix defining the neg-Laplace has 5 nonzeros in each row, for which 5 equations should hold:

- row sum is zero

- sum of application on horizontal coordinates is zero,

- sum of application on vertical coordinates is zero,

- sum of application on squares of both coordinates is 1,

- sum of application on difference of squares between hor. and vert. is zero.

The sparse Laplace matrix for a Cartesian grid is uniquely defined by this linear equation system. For the eye space foveate grid we use a minimum norm correction to the approximate difference of identity and adjacency matrix. In both cases it is only a low frequency approximation of the true Laplace operator, which would be computable in the Fourier basis from a diagonal matrix containing the Laplace operator eigenvalues.

return to the interpolation matrix

- We drop the requirement of nonnegative entries

- We add the requirement $\Delta_B \cdot B = B \cdot \Delta_F$ and $F \cdot \Delta_B = \Delta_F\cdot F$, where $\Delta_B$ or $\Delta_F$ is the applicable discrete Laplace for the grids,

as we want the same information being contained on both grids. Some uncertainty remains from the the fact that the Deltas are both approximations of the same idealised operator $\Delta$, but they are different approximations with different traits in symmetry and frequency responsiveness.

For the interpolation from the foveate hexagonal grid to the Cartesian grid with same resolution in the fovea center, we use $jinc$ function samples up to the 4th $jinc$ zero, where the scale of the $jinc$ is determined by requiring the immediate neighbours to be near the 1st zero. The row sums are then normalised to one. The pseudo-inverse is approximately the transpose multiplied by a diagonal matrix making row sums 1 again. This construction depends on the near-orthogonality of the $jinc$ samples.

Fourier transformation on a foveate grid

Applying Fourier transforms on Cartesian images is easy in Matlab. There is a function fft2 provided doing a

FFT on 2dim data, and a function fftshift, which moves the zero in space and frequency to the center of the rectangle, which can be combined in a custom function fft2s.

As the foveate space has much lower dimension than the Cartesian space, the Fourier transform of the foveate space is part of a subspace with equal dimension of the full FFT in Cartesian space, but it is not

obvious how to specify it. High frequency data con only be located near the center. The original location is recoded in the Fourier transform into the change rate of the phase factors. So, high frequencies have slowly changing phase and amplitude. Therefore the Fourier transform can be interpolated from a foveate sample of the full transform. For simplicity we try the same interpolation matrix as in the original space.

As the foveate space has lower dimension, other approaches are possible also, like directly using the Fourier matrix. Care has to be taken to eliminate those entries connecting high frequencies and excentric locations, which are not allowed by Shannon’s condition.

Whereas the Laplace operator, being locally bounded, may easily be implemented by a neural network, for the Fourier transform, where any frequency is connected with most locations with equal weight, only different phase, this is not obvious, and the idea that a Fourier transform happens in the visual system, seems to have been dropped. At this point, we need the Fourier transformation mostly for technical reasons. We have to do translations of an image to any target. For computation it is the most flexible and economical way. If the physiological system can accomplish the tasks needed without Fourier transform, we take it only as a computational shortcut.

Saccadic integration

We are now able to explain how the integration of the foveate data from new fixation after a saccade with data collected before may work:

- the perception space is translated to the center of the new fixation (anticipation of saccade)

- in case of moving images the effect of movements is anticipated for the new time

- the difference of the downsampling of this to foveate space and the new data is formed

- the difference is interpolated to perception space again and added there

The question arises, how from these operations which are all looking linear, a clearly nonlinear

effect like the scintillating grid can be explained. Neither the plain Hermann grid nor the disks alone show the behaviour of scintillating, only their additive combination does.

When the density of cones is low, then in the neighbourhood of a crossing only one cone may be responsible for the perception of the crossing, reporting one of the possible 3 luminance values, which either is the anticipated value there

or sometimes the opposite. Together with the long-reaching interpolation property of the grid (see $sinc$-function) resonance effects are possible now.

It is unlikely that the appearance of the black discs in the scintillating grid is depending on what is seen at the new fixation, as the shape and colour of the effect are not effected by changing the scale. So it can only be caused by the defoveation itself. Only the points where the illusion does not appear, are defined by being in the fovea now and the fixations before.

Establishing the Geometry

Since the end of 19th century (Felix Klein, Erlangen program) geometry is seen as a property of certain transformation groups on the space. On real 3dimensional space we have the affine and projective transformation groups, and, most importantly for the physical world, the Euclidean transformations generated by translations and rotations. If we add the time dimension, the 6-parametric Euclidean group is embedded into the Galilean group containing the uniform movements of rigid bodies.

The groups of the physical world should be homomorphically mapped to the perception space to establish there a geometry consistent with the physics of the outer world. It happens that Turski´s conformal camera fits this demand to a great deal.

The most simple subcase are the translations in the image plane. Any small saccade causes a small translation in the image plane. Whatever the way, how the brain computes its predictor, it is a perfect feedback for adjusting any parameters to those of the real world. And, at least for the 2-dimensional translation subgroup, being commutative, a Fourier transform is indeed feasible.

stereographic projection of the Riemann sphere

When the complex plane is extended with one additional point $\infty$, it is compactified and made topologically a sphere, due to B. Riemann. The connection between the sphere and the plane is defined by the stereographic projection. The automorphism group of the Riemann sphere is well studied, and known as Möbius group. It consists of transformations of the form $(\alpha\cdot z + \beta) / ( \gamma\cdot z + \delta)$ under the restriction $\alpha \cdot \delta - \beta\cdot \gamma = 1$ with complex valued parameters $\alpha$ to $\delta$. So there are 6 free real parameters, just as in the 3dim Euclidean group.

BTW, the number 6 happens to return as the number of layers in CGL.

Indeed, these automorphisms show up in the stereographic projection as Euclidean movements of the plane against the sphere. The main difference between these groups is the fact, that the translation in the 3rd is changed to scaling by the logarithm, and restricted to a half-space.

Schrödinger Equation of the Harmonical Oscillator

A very famous differential equation:

$$ {-\mathrm i\ \cdot\ \mathrm d}/{\rm d}t\ \Psi = \Delta \Psi + |x|^2 \cdot \Psi $$

describes the behaviour of a harmonic oscillator in quantum mechanics. The interesting point is, that at $t = \pi/2$, $\Psi(x,t)$ is transformed to its Fourier transform, and, at $t = \pi$, to $ \Psi(-x,t+\pi)$. Remarkably, both operators on the right side are local operators. This can be used to implement a Fourier transform consistent with the restrictions on physiological systems.

Obviously, any computational solution of the differential equation is not exact. Are there ways to approximate the exact Fourier transform by iteration? At a first step, the differential equation is approximated in time by small increments of, say, $\pi/10 000$ time units. After 10000 steps the data should be reversed in space with some error. Half of the error difference can be fed back 5000 steps, Adding this with the correct sign the value at $\pi/2$, which is the Fourier transform, will be improved. Perhaps this is what is happening on the pathway between V1 and CGL (corpus geniculatum laterale).

Encoding Motion

In quantum mechanics, the wave function encodes the full state of the particle it describes; that is, both position and momentum (velocity). They are measured by applying the position and momentum operators on the wave function. Analogously we can expect to get the position of (a thing within an image) by computing $\int \Psi^* \cdot x \cdot \Psi$ and the velocity by ${\rm i}\cdot\int \Psi^* \cdot \partial/\partial x \Psi$ , provided we find a suitable $\Psi$-function.

As a starting point, we compute the time-derivative of a raster image moving with constant velocity in horizontal direction. Obviously, the lines can be treated separately, but for this derivation the function needs to be interpolated between the raster points. The optimal bandwidth limited interpolation uses the cardinal sinus function. The derivative at the raster points therefore is computed as the convolution of of the alternating harmonic series and its negative in the positive vs. negative direction in each line with the samples at these points.

Conclusions

- There is a need for Fourier transform (straightness detection).

- A Fourier transform is feasible under reasonable restrictions.

- There are signs that a Fourier transform is happening (Hermann grid).

- The geometry of perception space is linked to that of the physical world by (eye) movementsi (Turski).

Matlab functions and scripts

Aftermath

I remember 3 questions from the discussion after the talk:

- Is there a lighter tool than Schroedinger’s equation to get a Fourier transform done?

- What is the relation to the generally accepted receptive fields?

- Is the linear ansatz compatible with known nonlinearity in the vision system?

Schroedinger

First, the equation as such is not very complicated, because it is a linear PDE. Any operator used to construct a solution in this way has to commute with the FT and have the natural numbers as eigenvalues. So probably no other solutions exist. The QM harmonic oscillator is well known and studied, mostly the second simplest example in QM textbooks (after the free particle).

What makes the name of Schroedinger important in quantum physics is the fact, that Schroedinger applied the equation to describe a the dynamics of a single particle. This is a different situation than in image processing, where the wave equation probably could be intrinsically motivated.

In further research I am going to apply the analogy to QM for the handling of motion, so I feel citing Schroedinger’s name we be more justified in the future.

Receptive fields

The receptive fields found by Hubel and Wiesel are accepted to so some sort of local Fourier analysis by Gabor wavelets. But a global FT as postulated in my talk has been sorted out at that time.

But how does the brain translate the receptive field content to the new location after a saccade, given the constraint that sampling is non-uniform? BTW, even a translation by shift, if it were uniform, seems not feasible, as all sizes of saccades have to be handled.

I find it algorithmically easier that the receptive fields may contain a Wigner distribution developed from a FT.

Nonlinearity

Of course, higher processes in vision need nonlinearities (see Bayes priming), but in the low vision processing, it seems important that any transform can be verified to do exactly what it is intended to do. In electrical engineering, it is common to use negative feedback from linear passive elements such as a resistor network to cancel the effects of nonlinearity in active elements of an amplifier. I have long sought a way to apply negative feedback in vision. Therefore I was really excited when I first heard about the existence of microsaccades. This was just the missing link between the exactness needed for the working of FT and the limited facilities in a physiological system like a neural net.

The fact that the scintillating grid is a strong illusion dependent on the concepts of periodicity and straightness of the lanes, and not part of any higher (such as decision) process, is proof that these concepts are realised in a very basic level of the vision system.

More about the role of nonlinearities in illusory contours.